Preface

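Our earlier projects all ran on OpenWrt. After some time in the field, the common feedback was that its performance is too low for a device acting as a HUB, so the HUB needs to move to a DPDK-based data plane, and the most mature option at present is VPP. So far, what I have tested on VPP is Layer 3 LAN-to-LAN communication between the HUB and a CPE.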

Service configuration

Topology

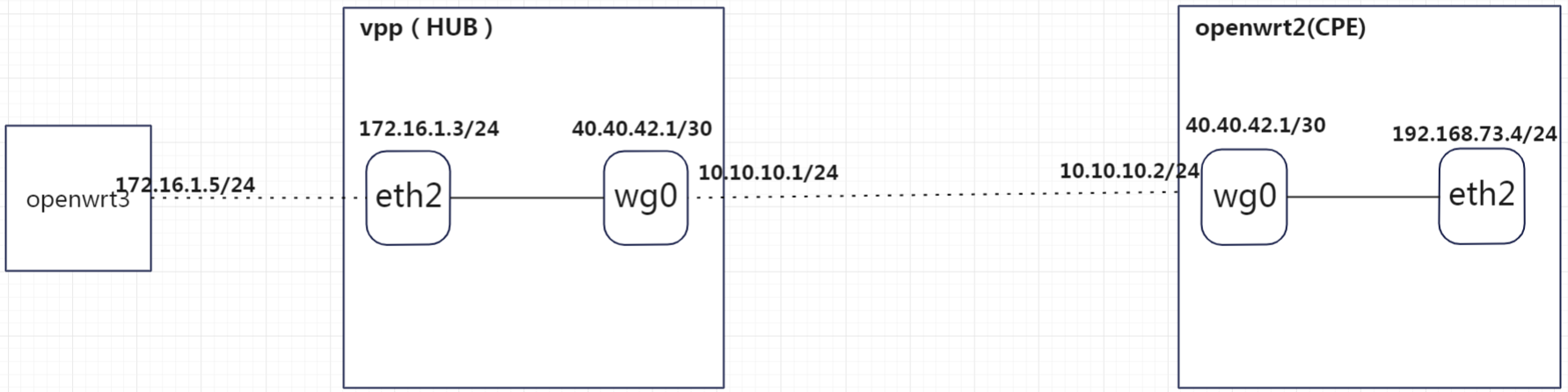

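The WAN ports of the two devices are directly connected. A WireGuard (wg) tunnel is established over the WAN ports, and Layer 3 forwarding is achieved by configuring routes.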

Configuration

HUB

- The VPP plugin configuration is as follows:

Plugin configuration file: /etc/vpp/startup.conf

plugins {

## Adjusting the plugin path depending on where the VPP plugins are

# path /ws/vpp/build-root/install-vpp-native/vpp/lib/vpp_plugins

## Add additional directory to the plugin path

# add-path /tmp/vpp_plugins

## Disable all plugins by default and then selectively enable specific plugins

# plugin default { disable }

plugin dpdk_plugin.so { enable }

plugin acl_plugin.so { enable }

plugin linux_cp_plugin.so { enable }

plugin linux_nl_plugin.so { enable }

plugin wireguard_plugin.so { enable }

plugin ping_plugin.so { disable }

plugin igmp_plugin.so { disable }

plugin arping_plugin.so { disable }

## Enable all plugins by default and then selectively disable specific plugins

plugin vrrp_plugin.so { disable }

}

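Linux-cp is a VPP plugin that creates Linux network interfaces mirroring their VPP data-plane counterparts. The general model is that Linux acts as the network stack, i.e. it runs the control-plane protocols such as ARP and IPv6 ND/MLD, while the VPP data plane does the forwarding, much like a software-based ASIC. The companion "linux_nl" plugin listens for Netlink messages and synchronizes the IP configuration of the paired interfaces.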

- The interface and route configuration is as follows:

## Enable LCP sync (mirroring between VPP and Linux)

sudo vppctl lcp lcp-sync on

## Configure the WAN port; the corresponding kernel interface is eth1

sudo vppctl lcp create GigabitEthernet3/0/0 host-if eth1

sudo ip link set dev eth1 up

sudo ip link set mtu 1500 dev eth1

sudo ip address add 10.10.10.1/24 dev eth1

## Configure the wg tunnel

sudo vppctl wireguard create listen-port 9999 private-key wNw3zMmL/MSvnlIZ+dBnJkHCD5gMEP1HS0cU5gHdhnM= src 10.10.10.1

sudo vppctl lcp create wg0 host-if wg0 tun

sudo vppctl wireguard peer add wg0 public-key rFHqtOHXmAlhat+xHk3XI1WpFy8CJv87S1XIPjDD1HA= allowed-ip 0.0.0.0/0 persistent-keepalive 25

sudo ip link set dev wg0 up

sudo ip link set mtu 1420 dev wg0

sudo ip address add 40.40.42.1/30 dev wg0

sudo ip route add 40.40.42.2/32 dev wg0

## Configure the LAN port; the corresponding kernel interface is eth2

sudo vppctl lcp create GigabitEthernet1b/0/0 host-if eth2

sudo ip link set dev eth2 up

sudo ip link set mtu 1500 dev eth2

sudo ip address add 172.16.1.3/24 dev eth2

## Configure the route to the CPE's LAN side (next hop is the CPE's wg address)

sudo ip route add 192.168.73.0/24 via 40.40.42.2
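After applying the HUB configuration, it can help to confirm that the interface pairs and the tunnel actually exist on the VPP side. The following is a minimal sketch, not part of the original procedure: the `run` helper and the `DRY_RUN` variable are hypothetical conveniences, and the `vppctl show` commands assume the linux_cp and wireguard plugins are loaded as in the startup.conf above.

```shell
#!/usr/bin/env bash
# DRY_RUN=1 (default) only prints each command; set DRY_RUN=0 on the HUB to run them.
DRY_RUN=${DRY_RUN:-1}

# Hypothetical helper: print the command in dry-run mode, otherwise execute it.
run() {
    if [ "$DRY_RUN" = 1 ]; then
        echo "+ $*"
    else
        "$@"
    fi
}

hub_checks() {
    run sudo vppctl show lcp                   # VPP <-> Linux interface pairs (eth1, eth2, wg0)
    run sudo vppctl show wireguard interface   # local wg interface, listen port, source address
    run sudo vppctl show wireguard peer        # peer key, allowed-ips, keepalive
    run sudo vppctl show interface addr        # addresses mirrored from Linux into VPP
}

hub_checks
```

In dry-run mode the script only lists the four commands, so it can be reviewed before being run on a live device.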

CPE

The CPE runs OpenWrt, to simulate the real deployment. Its configuration is as follows:

## WAN port configuration

config interface 'wan'

option type 'ovs-bridge'

option proto 'static'

option ipaddr '10.10.10.2'

option netmask '255.255.255.0'

option gateway '10.10.10.1'

list ifname 'eth1'

## LAN port configuration

config interface 'seth2'

option type 'ovs-bridge'

option proto 'static'

option ipaddr '192.168.73.4'

option netmask '255.255.255.0'

list ifname 'eth2'

## wg tunnel configuration

config interface 'wg1'

option proto 'wireguard'

option private_key 'CCX+tFOKNPMQg2nhH/7PNGcCp6ycKC/JtX2Y2m4Rw1c='

list addresses '40.40.42.2/30'

config wireguard_wg1 'wgserver1'

option public_key 'bzbI5vzSogyEOqlQBeElu7A3kipdlI6NFGdMUzTnzWw='

option endpoint_host '10.10.10.1'

option endpoint_port '9999'

option route_allowed_ips '1'

option persistent_keepalive '25'

list allowed_ips '0.0.0.0/0'

## Configure the route to the HUB's LAN side

config route 'ooo'

option interface 'wg1'

option target '172.16.1.0'

option netmask '255.255.255.0'

option gateway '40.40.42.1'
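Both static routes in this setup rely on a next hop that must be a well-formed IPv4 address inside the tunnel subnet (40.40.42.0/30). A mistyped gateway is easy to miss in a config file, so a small sanity check can be useful before applying it. The helpers below (`is_ipv4`, `ip_to_int`, `in_subnet`) are hypothetical names written for this illustration, and the sketch assumes bash.

```shell
#!/usr/bin/env bash

# Return 0 if $1 is a dotted-quad IPv4 address (exactly four octets, each 0-255).
is_ipv4() {
    local o
    [[ $1 =~ ^([0-9]{1,3})\.([0-9]{1,3})\.([0-9]{1,3})\.([0-9]{1,3})$ ]] || return 1
    for o in "${BASH_REMATCH[@]:1}"; do
        (( 10#$o <= 255 )) || return 1
    done
}

# Convert a dotted-quad IPv4 address to a 32-bit integer.
ip_to_int() {
    local a b c d
    IFS=. read -r a b c d <<< "$1"
    echo $(( (10#$a << 24) | (10#$b << 16) | (10#$c << 8) | 10#$d ))
}

# Return 0 if address $1 lies inside the subnet of CIDR $2 (e.g. 40.40.42.1/30).
in_subnet() {
    is_ipv4 "$1" && is_ipv4 "${2%/*}" || return 1
    local prefix=${2#*/} mask
    mask=$(( prefix == 0 ? 0 : (0xFFFFFFFF << (32 - prefix)) & 0xFFFFFFFF ))
    (( ($(ip_to_int "$1") & mask) == ($(ip_to_int "${2%/*}") & mask) ))
}

# Example: the CPE's wg address against the HUB's wg0 address/prefix.
in_subnet 40.40.42.2 40.40.42.1/30 && echo "40.40.42.2 is on the wg subnet"
# A five-octet string is rejected as malformed.
is_ipv4 40.40.40.42.1 || echo "40.40.40.42.1 is not a valid IPv4 address"
```

The same check applies in both directions: the HUB's route to 192.168.73.0/24 needs a next hop on the wg subnet, and so does the CPE's route to 172.16.1.0/24.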

Pinging the HUB's LAN side from the CPE succeeds:

# ping 172.16.1.3 -I 192.168.73.4

PING 172.16.1.3 (172.16.1.3) from 192.168.73.4: 56 data bytes

64 bytes from 172.16.1.3: seq=0 ttl=64 time=0.418 ms

64 bytes from 172.16.1.3: seq=1 ttl=64 time=0.337 ms

64 bytes from 172.16.1.3: seq=2 ttl=64 time=0.373 ms

64 bytes from 172.16.1.3: seq=3 ttl=64 time=0.380 ms

64 bytes from 172.16.1.3: seq=4 ttl=64 time=0.368 ms

64 bytes from 172.16.1.3: seq=5 ttl=64 time=0.405 ms

64 bytes from 172.16.1.3: seq=6 ttl=64 time=0.904 ms

64 bytes from 172.16.1.3: seq=7 ttl=64 time=0.488 ms

64 bytes from 172.16.1.3: seq=8 ttl=64 time=0.378 ms

64 bytes from 172.16.1.3: seq=9 ttl=64 time=0.377 ms

64 bytes from 172.16.1.3: seq=10 ttl=64 time=0.349 ms

64 bytes from 172.16.1.3: seq=11 ttl=64 time=0.345 ms

64 bytes from 172.16.1.3: seq=12 ttl=64 time=0.374 ms

64 bytes from 172.16.1.3: seq=13 ttl=64 time=0.386 ms

64 bytes from 172.16.1.3: seq=14 ttl=64 time=0.338 ms

64 bytes from 172.16.1.3: seq=15 ttl=64 time=0.342 ms

64 bytes from 172.16.1.3: seq=16 ttl=64 time=0.344 ms

64 bytes from 172.16.1.3: seq=17 ttl=64 time=0.403 ms

64 bytes from 172.16.1.3: seq=18 ttl=64 time=0.649 ms

64 bytes from 172.16.1.3: seq=19 ttl=64 time=0.348 ms

64 bytes from 172.16.1.3: seq=20 ttl=64 time=0.359 ms

64 bytes from 172.16.1.3: seq=21 ttl=64 time=0.330 ms

64 bytes from 172.16.1.3: seq=22 ttl=64 time=0.359 ms

64 bytes from 172.16.1.3: seq=23 ttl=64 time=0.343 ms

^C

--- 172.16.1.3 ping statistics ---

24 packets transmitted, 24 packets received, 0% packet loss

round-trip min/avg/max = 0.330/0.404/0.904 ms

Open issues

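On the HUB, the wg peer's allowed-ip was already set to 0.0.0.0/0 and the wg tunnel came up, yet the two tunnel endpoints could not ping each other. Packet captures showed the wg interface on the HUB both receiving and sending packets, but nothing arrived at the CPE. After a VXLAN plus a bridge was configured on the HUB, however, the tunnel could be pinged. Comparing the forwarding tables of the two setups showed that the failing one was missing a specific (host) route to the peer's wg address; once that route was added, the ping worked.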

I do not know whether this is a VPP bug or intended behavior.
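The workaround can be sketched as follows: look at what VPP's FIB holds for the peer's wg address, add the host route in Linux, and look again. This is only an illustration under assumptions. The `run` helper, `DRY_RUN`, `PEER_ADDR` and `WG_IF` are hypothetical names, the peer address is taken from the CPE configuration above, and the sketch assumes the route added in Linux is mirrored into VPP through the linux_nl plugin.

```shell
#!/usr/bin/env bash
# DRY_RUN=1 (default) only prints each command; set DRY_RUN=0 on the HUB to run them.
DRY_RUN=${DRY_RUN:-1}
PEER_ADDR=${PEER_ADDR:-40.40.42.2}   # peer's wg address (from the CPE config)
WG_IF=${WG_IF:-wg0}                  # Linux-side name of the wg interface pair

# Hypothetical helper: print the command in dry-run mode, otherwise execute it.
run() {
    if [ "$DRY_RUN" = 1 ]; then
        echo "+ $*"
    else
        "$@"
    fi
}

fix_peer_route() {
    # 1. Inspect the FIB entry VPP currently uses for the peer address.
    run sudo vppctl show ip fib "${PEER_ADDR}/32"
    # 2. Add the specific host route on the Linux side.
    run sudo ip route add "${PEER_ADDR}/32" dev "$WG_IF"
    # 3. Check the FIB again to see whether the /32 entry now appears.
    run sudo vppctl show ip fib "${PEER_ADDR}/32"
}

fix_peer_route
```

Comparing the output of steps 1 and 3 is one way to see whether the /32 entry is what changes when the ping starts working.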

References